DeepSeek V3 and R1: Innovative Architectures and Advanced Reasoning Capabilities in Open-Source LLMs

Introduction

Open-source models are rapidly transforming the AI landscape, offering compelling alternatives to closed-source models. DeepSeek's recent advancements, DeepSeek V3 and R1, showcase the potential of architectural innovation, efficient scaling, and reinforcement learning (RL) for reasoning to significantly enhance the capabilities of large language models (LLMs).

Key Architectural Innovations

DeepSeek’s advancements are built on core architectural innovations that enhance efficiency, scalability, and reasoning capabilities. These are innovative techniques shared across DeepSeek V3 and R1, forming the foundation for their training and inference efficiency:

Multi-Head Latent Attention (MLA)

Compresses attention keys and values (and queries) into low-rank representations, shrinking the KV cache and making inference and training more efficient.

Decoupled RoPE enables long-context extension from 4K to 128K tokens via positional interpolation.

Mixture-of-Experts (MoE)

Activates only 37B of the 671B parameters per token, using fine-grained expert selection with shared and routed experts.

Proposes an auxiliary-loss-free load balancing technique, achieving better expert specialization and balanced token distribution without dropping tokens.

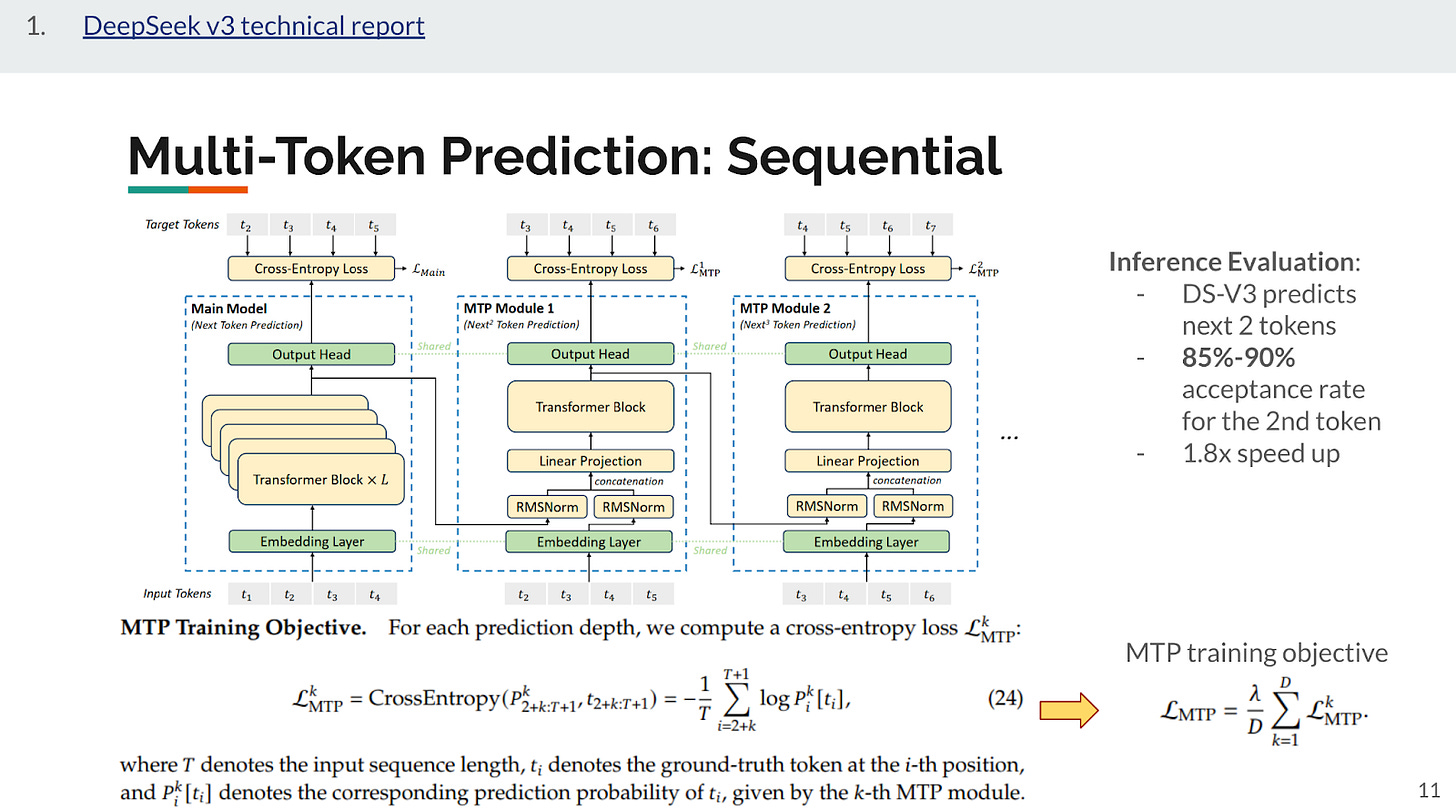

Multi-Token Prediction (MTP)

Extends prediction scope to multiple future tokens.

Improves training efficiency with additional objectives; at inference, speculative decoding with only 2-token MTP yields a 1.8x speedup.

Group Relative Policy Optimization (GRPO)

Simplifies PPO to optimize policy models with group samples instead of a heavy value model. Improves mathematical reasoning capability with less memory and compute.

Used in DeepSeek V3 for RLHF (alignment training) and R1 for reasoning development.

Mixed-Precision Training (FP8/BF16/FP32)

Uses FP8 for computation-heavy layers (such as GEMM kernels), reducing memory footprint.

Maintains BF16/FP32 for numerically sensitive components, preserving training stability.

Efficient and Scalable Training Infrastructure

Trained on 2048 Nvidia H800 GPUs using a custom 16-way pipeline and 64-way expert parallelism.

Achieves high efficiency at a fraction of the cost of proprietary models.

Model Evolution: From DeepSeek V3 to R1 and Beyond

Figure 1. Complete evolution of DeepSeek V3 and R1-related models from the DeepSeek V3-Base model

DeepSeek follows a structured, multi-stage process that builds upon the DeepSeek V3 base model to develop DeepSeek V3, R1-Zero, R1, and distilled models from R1, as illustrated in Figure 1. At a high level, the V3-Base to V3 path improves the standard fine-tuning process for chat applications, while the V3-Base to R1 path focuses on progressively refining reasoning ability while ensuring efficiency and generalization.

1. DeepSeek V3-Base: The Foundation for Scalable and Efficient Training

Trained with mixed precision (partial FP8) on 14.8T tokens of high-quality data.

Leverages MLA, MoE, and MTP to optimize training and/or inference.

2. DeepSeek V3: State-of-The-Art model for Chat Applications

Post-trained with supervised fine-tuning (SFT) on an instruction dataset of 1.5M samples, followed by RLHF with GRPO for alignment.

Outperforms other open-source models and is comparable to leading closed-source models. Generates non-reasoning training data for reasoning-focused training in R1.

3. DeepSeek R1-Zero: Reasoning Capabilities Emerge from Pure RL

Trained exclusively with RL (GRPO) and rule-based rewards, without any supervised fine-tuning.

Shows that structured reasoning can emerge purely from RL, but lacks fluency and coherence.

4. DeepSeek R1: Reasoning Model for ALL Scenarios in Real-World Usability

Uses a four-stage RL pipeline, integrating reasoning rewards, rejection sampling, and model-based preference learning.

Achieves o1-level performance at lower cost.

5. Distillation: Empower Small Models with Reasoning Capability

R1’s reasoning ability is successfully distilled into smaller models (e.g., Qwen-32B, Llama3-70B).

Distilled models retain reasoning performance while being more cost-efficient than direct training.

Note: This post expands on a slide deck that I presented to an internal GenAI review group, summarizing the DeepSeek V3 and R1 models and 10+ related papers. For readers who prefer a detailed treatment, please read on.

Key Innovations behind DeepSeek V3 & R1

1. Multi-Head Latent Attention (MLA): Faster, Leaner Attention with Decoupled RoPE for Context Extension

Problem: Traditional transformers store large KV caches, leading to memory bottlenecks, especially during inference.

Solution: MLA reduces KV cache size (embedding dimension 7168 → 512), enabling faster inference with lower memory overhead.

Figure 2. Multi-head latent attention (MLA) and comparison of model capability and KV cache with other attention variants.

As illustrated in Figure 2, MLA compresses the attention keys and values into a joint low-rank latent ($c_t^{KV}$), reducing the memory required for the KV cache during inference. This is critical for controlling inference cost as request volume grows, since each input request needs its own KV cache, and even more so for reasoning models that rely on inference-time scaling.

To further reduce activation memory during training, the queries are also compressed into a low-rank latent ($c_t^{Q}$), although they do not need to be cached for inference. Follow-up work, TransMLA [15], shows that MLA is quite general: Group Query Attention (GQA), used in many major models (LLaMA, Qwen, Mixtral), can be represented by MLA with the same KV cache overhead.
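To make the cache saving concrete, the sketch below compares the number of cached elements per token per layer for standard multi-head attention and for MLA. The latent width of 512 and the 128 heads come from this post; the per-head dimension of 128 and the 64-dimensional shared RoPE key are assumptions based on my reading of the V3 report, and the function names are illustrative only.

```python
def mha_cache_elems(num_heads: int, head_dim: int) -> int:
    """Cached elements per token per layer for standard MHA (keys + values)."""
    return 2 * num_heads * head_dim


def mla_cache_elems(latent_dim: int, rope_dim: int) -> int:
    """Cached elements per token per layer for MLA (KV latent + shared RoPE key)."""
    return latent_dim + rope_dim


# Assumed sizes: 128 heads of width 128, latent width 512, RoPE key width 64.
mha = mha_cache_elems(num_heads=128, head_dim=128)
mla = mla_cache_elems(latent_dim=512, rope_dim=64)
reduction = 1 - mla / mha
print(f"MHA: {mha}, MLA: {mla}, reduction: {reduction:.1%}")
```

Under these assumed sizes the reduction comes out at roughly 98%, in line with the estimate quoted later in this post.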

Bonus: Decoupled RoPE improves inference efficiency by reducing intermediate results computation, and enables context length extension from 4K to 128K tokens dynamically.

RoPE provides relative positional embeddings for tokens and has proven effective for context extension after a model is pre-trained with a shorter context, as in Llama [10] and RoFormer [11]. With MLA, if RoPE is applied directly to the keys $k_t^{C}$ as in a normal architecture during the attention calculation (Equation 46 in Figure 2), $W^{UK}$ can no longer be absorbed into $W^{UQ}$ during inference, because the RoPE matrix sits between them and matrix multiplication is not commutative. As a result, the keys for all prefix tokens would have to be recomputed during inference, which hurts efficiency.

As a solution, a decoupled RoPE strategy uses additional multi-head queries and a shared key ($k_t^{R}$) to carry RoPE, which is concatenated with the keys ($k_t^{C}$) derived from the low-rank compression. With this, by the associative law of matrix multiplication, $W^{UK}$ can be absorbed into $W^{UQ}$, and $W^{UV}$ into $W^{O}$. The full keys and values therefore never need to be materialized for each query, which avoids the computational overhead of recomputing $k_t^{C}$ and $v_t^{C}$ during inference.
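The absorption argument can be checked numerically. The following toy sketch (tiny integer matrices, a single head, no RoPE; all sizes and values are made up for illustration) shows that the attention score computed from fully materialized queries and keys equals the score computed directly from the latents with a pre-multiplied matrix.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*a)]


# Toy sizes: latent dimension 2, per-head dimension 3 (integers keep the check exact).
W_UQ = [[1, 2], [0, 1], [3, -1]]   # up-projects the query latent (3 x 2)
W_UK = [[2, 0], [1, 1], [-1, 4]]   # up-projects the KV latent (3 x 2)
c_q = [[1], [2]]                   # query latent for the current token (2 x 1)
c_kv = [[3], [-1]]                 # cached KV latent for a prefix token (2 x 1)

# Naive path: materialize the full query and key, then take their dot product.
q = matmul(W_UQ, c_q)
k = matmul(W_UK, c_kv)
naive_score = matmul(transpose(q), k)[0][0]

# Absorbed path: fold W_UK into the query side once; the key is never rebuilt.
W_absorbed = matmul(transpose(W_UQ), W_UK)   # 2 x 2, can be precomputed
absorbed_score = matmul(matmul(transpose(c_q), W_absorbed), c_kv)[0][0]

print(naive_score, absorbed_score)
```

If a position-dependent rotation sat between the two projection matrices, `W_absorbed` could no longer be precomputed once, which is why RoPE is carried by a separate key component.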

In addition to improving inference efficiency, decoupled RoPE also allows DeepSeek V3 to build on prior positional interpolation techniques and extend the model context from 4K to 128K in two phases: first from 4K to 32K, then from 32K to 128K. More details can be found in [7].

2. DeepSeek MoE – Smarter Scaling Without Waste

Scale Smartly: Instead of activating all 671B parameters, DeepSeek V3 only activates 37B per token, balancing efficiency and performance.

Load Balancing Breakthrough: Instead of an auxiliary loss, a bias-term-based mechanism keeps expert selection balanced without additional loss functions.

Figure 3. DeepSeek MoE and the auxiliary-loss-free load balancing strategy

DeepSeekMoE (Mixture of Experts) uses a finer-grained expert selection strategy with shared and routed experts, and it is applied to the FFN in all 61 layers except the first three. Concretely, each MoE layer consists of 1 shared expert and 256 routed experts, of which 8 are activated for each token. A load balancing scheme is essential for MoE models to mitigate routing collapse. Instead of conventional solutions that rely on an auxiliary loss to avoid imbalanced load at some performance penalty, DeepSeek proposes an auxiliary-loss-free load balancing strategy that achieves a better trade-off between load balance and model performance. A complementary sequence-wise auxiliary loss with a very small weight (0.0001) is added to prevent extreme imbalance within any single sequence.

As depicted in Figure 3, a bias term $b_i$ is introduced for each expert and added to the corresponding affinity score $s_{i,t}$ to determine the top-K active experts for each token. During training, at the end of each step, the scheme decreases the bias term by $\gamma$ if its corresponding expert is overloaded and increases it by $\gamma$ if the expert is underloaded, where $\gamma$ is a hyper-parameter called the bias update speed. During pre-training, $\gamma$ is set to 0.001 for the first 14.3T tokens and to 0.0 for the remaining 500B tokens. Through this dynamic adjustment, DeepSeek-V3 keeps the expert load balanced during training and achieves better performance than models that encourage load balance through pure auxiliary losses. As shown in Figure 4, further investigation of the expert load reveals that the auxiliary-loss-free model shows greater expert specialization than the auxiliary-loss-based one. The relative expert load denotes the ratio between the actual expert load and the theoretically balanced expert load.
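A minimal sketch of the bias-based balancing step described above, using a toy batch of three tokens and four experts. The function names are hypothetical, and treating "overloaded" as "above the mean load for the step" is my assumption rather than something stated in the report.

```python
def route_top_k(scores, bias, k):
    """Pick the k experts with the highest biased affinity for one token."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:k]


def update_bias(bias, loads, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    new_bias = []
    for b, load in zip(bias, loads):
        if load > mean_load:
            new_bias.append(b - gamma)
        elif load < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


# Toy batch: 4 experts, top-2 routing, affinity scores skewed toward expert 0.
batch_scores = [[0.9, 0.5, 0.3, 0.1], [0.8, 0.6, 0.2, 0.3], [0.7, 0.4, 0.5, 0.2]]
bias = [0.0, 0.0, 0.0, 0.0]
loads = [0, 0, 0, 0]
for token_scores in batch_scores:
    for expert in route_top_k(token_scores, bias, k=2):
        loads[expert] += 1

# One update at the end of the step, with gamma as the bias update speed.
bias = update_bias(bias, loads, gamma=0.001)
print(loads, bias)
```

Repeated over many steps, the popular experts accumulate a negative bias and the idle ones a positive bias, nudging the top-K selection toward balance without adding a term to the loss.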

Figure 4. Expert load of auxiliary-loss-free and auxiliary-loss-based models on three domains in the Pile test set.

3. Multi-Token Prediction (MTP) – Predicting More, Faster

Training Efficiency: Instead of generating one token per step, MTP predicts multiple tokens ahead, densifies training signals/objectives and potentially improves data efficiency.

Speculative Decoding: Additional tokens are drafted and then verified by the main model, achieving a 1.8x inference speedup with only 2-token MTP.

Figure 5. MTP in DeepSeek models and its benefits to improve training objectives and inference speed

The MTP objective extends the prediction scope to multiple future tokens at each position. In contrast to parallel MTP [5], DeepSeek predicts the additional tokens sequentially and keeps the complete causal chain at each prediction depth. Specifically, as illustrated in Figure 5, its MTP implementation uses D sequential MTP modules after the main model to predict D additional tokens. Each MTP module shares its embedding layer with the main model. During training, MTP adds extra objectives to the loss function and potentially improves data efficiency as well. The overall pre-training loss therefore combines the main next-token prediction loss, the MTP training loss, and the complementary balance loss from MoE.
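As a hedged sketch of how these terms fit together, the combined objective can be written as below. The symbol names are assumptions that follow my reading of the V3 report's notation: $\mathcal{L}_{\text{main}}$ is the standard next-token loss, $\mathcal{L}_{\text{MTP}}^{k}$ is the loss of the $k$-th MTP module, $\lambda$ is the MTP weighting factor, and $\alpha$ is the small weight on the sequence-wise balance loss $\mathcal{L}_{\text{Bal}}$.

```latex
\mathcal{L}
  = \mathcal{L}_{\text{main}}
  + \frac{\lambda}{D} \sum_{k=1}^{D} \mathcal{L}_{\text{MTP}}^{k}
  + \alpha \, \mathcal{L}_{\text{Bal}}
```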

Figure 6. Three-step speculative decoding process: propose → verify → accept/reject

MTP can also speed up inference via speculative decoding, in both sequential and parallel architectures [5][8][9]. As detailed in Figure 6, the speculative decoding process consists of three main steps: Propose → Verify → Accept/Reject. In DeepSeek's model, speculative decoding with only 2-token MTP achieves a 1.8x inference speedup, with an 85%-90% acceptance rate for the speculative second token. Other work reports speedups of 2-5x with different numbers of speculative tokens.
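The propose, verify, accept/reject loop can be sketched as follows. This is a simplified greedy variant (a drafted token is accepted only when it equals the verifier's own choice), which is an assumption for illustration; the function names are hypothetical. The second function gives a back-of-the-envelope estimate of tokens emitted per verification pass, assuming each drafted token is accepted independently.

```python
def verify_draft(draft_tokens, target_tokens):
    """Accept draft tokens up to the first disagreement with the target model.

    target_tokens holds the target model's own choice at each draft position
    plus one extra position, so it is one element longer than draft_tokens.
    """
    accepted = []
    for drafted, verified in zip(draft_tokens, target_tokens):
        if drafted != verified:
            accepted.append(verified)   # reject: fall back to the target's token
            return accepted
        accepted.append(drafted)        # accept the drafted token
    # Every draft was accepted, so the extra verified token comes for free.
    accepted.append(target_tokens[len(draft_tokens)])
    return accepted


def expected_tokens_per_step(acceptance_rate, num_draft=1):
    """Expected tokens per verification pass, assuming independent acceptance."""
    return 1 + sum(acceptance_rate ** i for i in range(1, num_draft + 1))


print(verify_draft([5, 7], [5, 8, 9]))
for rate in (0.85, 0.90):
    print(rate, expected_tokens_per_step(rate))
```

With one speculative token and an 85%-90% acceptance rate, this estimate gives about 1.85 to 1.9 tokens per pass, which is consistent with the reported 1.8x speedup once verification overhead is accounted for.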

4. Group Relative Policy Optimization (GRPO) – Stabilizing RL for Efficient Post-Training

Background: RLHF (Reinforcement Learning from Human Feedback) is still an active research area and is widely used for fine-tuning large language models. Proximal Policy Optimization (PPO) is a very popular algorithm for the RLHF process, as depicted in the upper half of Figure 7. The PPO algorithm involves multiple models for reward and value estimation; brief definitions follow to make sure everyone is on the same page.

Policy Model (actor): current LLM under training. After training, it can generate responses according to the action distribution implicit inside the model.

Value Model (critic): A model that is trying to estimate the long term reward given certain actions; evaluates action taken by the actor.

Reference Model: A frozen version of the original LLM you are training, usually after SFT. Used to compute the KL divergence penalty that keeps the policy model from drifting far from the original SFT behavior.

Reward Model: The model that was trained on human preferences, and outputs scalar value for reward.

Figure 7. GRPO with simplified value estimation as compared to PPO

Problems: Although popular, PPO faces several challenges when applied to RLHF for LLMs: a heavy memory and compute burden, since four models are involved, and the difficulty of training an accurate value model for token-level credit assignment when the reward model only scores the last token of each sequence.

Alternative Solution: GRPO improves on PPO by removing the value model (critic) and directly using a group of sampled outputs per prompt to estimate the advantages for training. These design choices help alleviate the challenges of applying PPO to LLM post-training.

✅ Remove value model to improve memory efficiency: By removing the critic, GRPO significantly reduces the number of parameters and the memory footprint, which is particularly beneficial when fine-tuning large LLMs. Some optimized PPO implementations (such as stable-baselines3) already share a base model between the policy and value models, with two heads as in multi-task learning; in that case GRPO's memory saving is more modest.

✅ Direct advantage estimation to simplify training pipeline: Instead of relying on a learned value model with bootstrapping and GAE, GRPO estimates advantages by comparing multiple samples per prompt, thus simplifying the credit assignment mechanism, albeit at a group (sequence) level rather than the token level.

✅ Relative advantage computation to reduce variance through group sampling: By using multiple outputs for the same prompt and calculating the advantage as the deviation from the group average reward, GRPO mitigates the high variance associated with sparse, sequence-level rewards.

✅ Updates reference model iteratively: GRPO’s approach of periodically updating the reference model balances the need for a stable KL penalty with the need for the reference model to stay relevant as the policy evolves, which can be advantageous over a completely fixed reference model in certain settings. On a related note, the KL penalty regularizes the training process: since the value model is removed in GRPO, an unbiased KL estimator that is guaranteed to be positive [17] is added directly to the main training objective.

❌ Increased per-step compute: Although GRPO reduces memory usage by discarding the value model, it introduces additional compute overhead per update step because it requires generating and scoring multiple completions per prompt.
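The group-relative advantage and the KL estimator mentioned in the list above can be sketched in a few lines. This is a minimal illustration, not DeepSeek's implementation: the function names are hypothetical, the use of the population standard deviation and the small `eps` are my assumptions, and the KL form (ratio minus log-ratio minus one) follows my reading of the GRPO paper.

```python
import math
import statistics


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled output's reward against its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def kl_estimate(logp_policy, logp_ref):
    """Per-token KL estimate r - log(r) - 1 with r = pi_ref / pi_policy."""
    log_ratio = logp_ref - logp_policy
    return math.exp(log_ratio) - log_ratio - 1


# Four sampled completions for one prompt, scored 1.0 (correct) or 0.0 (wrong).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
print(kl_estimate(logp_policy=-1.0, logp_ref=-2.0))
```

Every token of a completion shares that completion's advantage, which is why no learned critic is needed; the estimator is zero when the two log-probabilities agree and grows as they diverge.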

Model Evolution and Insights in the Training Process

DeepSeek follows a structured, multi-stage process that builds upon the DeepSeek V3 base model to develop DeepSeek V3, R1-Zero, R1, and distilled models from R1, as illustrated in Figure 1. At a high level, the V3-Base to V3 path improves the standard fine-tuning process for chat applications, while the V3-Base to R1 path focuses on progressively refining reasoning ability while ensuring efficiency and generalization. This section summarizes the model evolution process along with insights into post-training choices and key results.

1. DeepSeek V3-Base: The Foundation for Scalable and Efficient Training

Based on the key architecture innovations described above, the pre-training process creates the DeepSeek V3 base model with mixed precision (partial FP8 training) on highly optimized infrastructure. Trained on 14.8T tokens of high-quality data, DeepSeek V3-Base is a decoder-only model with 61 transformer layers and serves as the base model for the DeepSeek LLM model family. It integrates MLA, MoE, and MTP techniques to achieve:

High inference efficiency (MLA): estimated KV cache reduction is 98% with 128 heads compared to traditional MHA. MLA with decoupled RoPE also allows context length extension from 4K to 128K tokens.

Cost-effective scaling (MoE): Only 37B of the 671B total parameters are activated for each token, and the proposed auxiliary-loss-free load balancing strategy achieves better expert specialization.

High performance training and faster inference (MTP): better training performance with diverse training objectives from MTP. Also achieves 1.8x inference speed up with only 2-token MTP.

Table 3 of the DeepSeek V3 technical report [1] compares representative open-source base models; DeepSeek V3-Base achieves the best performance on most benchmarks, especially on math and code tasks.

2. DeepSeek V3: State-of-The-Art model for Chat Applications

Figure 8. Post-training process from DeepSeek V3-base to DeepSeek V3.

DeepSeek V3 is created with two post-training steps from the base model as depicted in Figure 8.

Supervised fine-tuning (SFT): trained for two epochs with an instruction-tuning dataset of about 1.5M samples.

RLHF with GRPO for alignment: RL uses rule-based reward for verifiable questions like math and coding, and model-based reward for questions with free-form ground truth answers (essentially follows the constitutional AI paradigm [18] by leveraging the voting evaluation results from V3 itself as reward information). Further, the reward model in GRPO is trained from a model checkpoint after the previous SFT step.

Comparisons between DeepSeek-V3 and other representative chat models are summarized in Table 6 of the DeepSeek V3 technical report [1]. All models are evaluated in a configuration that limits the output length to 8K. Benchmarks containing fewer than 1000 samples are tested multiple times using varying temperature settings to derive robust final results. DeepSeek-V3 stands as the best-performing open-source model, and also exhibits competitive performance against frontier closed-source models.

3. DeepSeek R1-Zero: Reasoning Capabilities Emerge from Pure RL

Figure 9. Post training process from DeepSeek V3-Base to DeepSeek R1-Zero.

Figure 10. Training template for DeepSeek-R1-Zero. prompt will be replaced with the specific reasoning question during training.

Unlike the V3 model, which undergoes supervised fine-tuning before RL, DeepSeek R1-Zero is trained purely with reinforcement learning and no supervised data, as shown in Figure 9. A rule-based reward system decides the optimization direction. Specifically, it includes an accuracy reward to ensure response correctness and a format reward that requires the model to put its thinking process between <think> and </think> tags. The training template, described in Figure 10, requires DeepSeek-R1-Zero to first produce a reasoning process, followed by the final answer.
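A rule-based reward of this kind can be sketched with a regular expression. The snippet below is only an illustration under stated assumptions: the function names are hypothetical, the answer is taken to be whatever follows the closing tag, and exact string match stands in for a real answer checker (a math verifier or a test harness for code).

```python
import re

# Reasoning inside <think>...</think>, followed by a non-empty final answer.
THINK_PATTERN = re.compile(r"^<think>(.+?)</think>\s*(.+)$", re.DOTALL)


def format_reward(response: str) -> float:
    """1.0 when the reasoning sits inside <think> tags and an answer follows."""
    return 1.0 if THINK_PATTERN.match(response.strip()) else 0.0


def accuracy_reward(response: str, reference_answer: str) -> float:
    """1.0 when the text after </think> matches the reference answer exactly."""
    match = THINK_PATTERN.match(response.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference_answer.strip() else 0.0


response = "<think>2 + 2 equals 4.</think> 4"
print(format_reward(response), accuracy_reward(response, "4"))
```

Because both rewards are computed by rules rather than by a learned model, there is no neural reward model to train and none for the policy to exploit.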

Surprisingly, it demonstrates that structured reasoning can emerge purely from RL, as in the example "aha moment" shown in Figure 11.

Figure 11. The "aha moment" recorded during the R1-Zero training process.

The model also outperforms o1-mini on various benchmarks (Figure 13), but it lacks fluency and coherence and requires further training for practical usability, which is the goal of the R1 model.

4. DeepSeek R1: Reasoning Model for ALL Scenarios in Real-World Usability

Figure 12. Post training process from DeepSeek V3-Base to DeepSeek R1 with a 4-stage pipeline.

DeepSeek R1 builds upon V3-Base with a four-stage post-training pipeline to further improve reasoning and better align the model for general-purpose scenarios:

Cold-Start SFT focuses on reasoning: trained on a few thousand samples to improve readability and formatting.

Reasoning-oriented RL (RoRL): A rule-based reward system with the same accuracy and format rewards as in R1-Zero. To mitigate the language mixing issue, it introduces an additional language consistency reward, calculated as the proportion of target-language words in the CoT.
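The language consistency reward is simple enough to sketch directly from its definition. In the snippet below the word-level language check is passed in as a function; the ASCII-only heuristic used for English is a crude, hypothetical stand-in for a real language detector.

```python
def language_consistency_reward(cot: str, is_target_word) -> float:
    """Fraction of whitespace-separated words in the CoT that are in the target language."""
    words = cot.split()
    if not words:
        return 0.0
    return sum(1 for word in words if is_target_word(word)) / len(words)


def looks_english(word: str) -> bool:
    """Crude stand-in for a language detector: ASCII-only tokens count as English."""
    return word.isascii()


print(language_consistency_reward("the answer is 四", looks_english))
```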

After Step 2, an unnamed reasoning model checkpoint is created, denoted as the "RoRL checkpoint" in Figure 1. This checkpoint is used to collect SFT data for the subsequent step. The dataset contains reasoning data and non-reasoning data.

Reasoning data with 600k samples: created by rejection sampling from the RoRL checkpoint over many reasoning trajectories generated in response to curated reasoning prompts. The dataset goes beyond verifiable questions that can be evaluated with rule-based rewards. In those cases, a generative reward model is used: the ground truth and the model prediction are fed into DeepSeek V3 for judgment. The process samples multiple responses for each question and retains only the correct ones.

Non-reasoning data with 200k samples: part of it comes from the SFT dataset of DeepSeek V3, and the rest is generated by the DeepSeek V3 model. Except for very simple questions, the prompts ask the V3 model to generate a chain of thought before answering.
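The rejection sampling used to build the reasoning data can be sketched as below. The `generate` and `is_correct` callables are hypothetical placeholders: in the real pipeline the first would be the RoRL checkpoint and the second a rule-based checker or a V3-based judge, while here they are toy functions so the example runs on its own.

```python
import random


def rejection_sample(prompt, generate, is_correct, num_samples=4):
    """Sample several responses for one prompt and keep only those judged correct."""
    candidates = [generate(prompt) for _ in range(num_samples)]
    return [c for c in candidates if is_correct(prompt, c)]


# Toy stand-ins: a "model" that answers at random and an exact-match checker.
rng = random.Random(0)


def toy_generate(prompt):
    return rng.choice(["4", "5", "22"])


def toy_is_correct(prompt, response):
    return response == "4"


kept = rejection_sample("What is 2 + 2?", toy_generate, toy_is_correct, num_samples=8)
print(len(kept), "of 8 samples kept")
```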

After the creation of the SFT dataset with 800k samples, Step 3 starts from the V3-Base model, not the RoRL checkpoint.

General purpose SFT: Unlike the reasoning-focused SFT during initial cold-start, it enhances the model’s capabilities in writing, role-playing, and other general-purpose tasks.

Final RL for all scenarios: Similar to the V3 pipeline, it uses rule-based rewards for verifiable questions and model-based rewards for free-form questions to capture human preferences. Helpfulness checks focus on the final summary to emphasize the utility and relevance of the response while minimizing interference with the reasoning process. Harmlessness checks evaluate the entire response, including the reasoning process and summary, to mitigate potential risks, biases, and harmful content.

As summarized in Figure 13, the resulting DeepSeek-R1 clearly improves on the reasoning performance of DeepSeek-R1-Zero across various benchmarks, performing at the same level as the OpenAI o1 model. R1 also resolves the readability and usability issues, as demonstrated by the pleasant experience of DeepSeek's chat application, which has gained much popularity since launch.

Figure 13. Benchmark comparison of OpenAI o1 and DeepSeek R1 models (annotated from Table 2 in R1 paper [2])

5. Distillation: Empower Small Models with Reasoning Capability

Figure 14. SFT process to distill DeepSeek R1 into smaller models in Qwen and Llama model series.

This is an interesting way to create smaller models with reasoning capabilities. Given the popular post-training techniques, there are at least two approaches to achieve this:

Leverage RL like the development of R1-Zero and R1 model described above.

Distill knowledge from powerful reasoning models to smaller models as depicted in Figure 14.

The DeepSeek team explored both directions to understand the effectiveness of both approaches. Since there is no smaller base model in DeepSeek's model family, models from Qwen and Llama are chosen. Specifically, the chosen base models are Qwen2.5-Math-1.5B, Qwen2.5-Math-7B, Qwen2.5-14B, Qwen2.5-32B, Llama-3.1-8B, and Llama-3.3-70B-Instruct.

Figure 15 summarizes the effectiveness of the related approaches with Qwen-32B-Base as the base model. DeepSeek-R1-Zero-Qwen-32B uses the same RL-only training strategy, trained for over 10K steps on math, code, and STEM data. The model achieves performance similar to QwQ-32B-Preview, showing that the RL-only approach is also effective for gaining reasoning capability with smaller models.

However, DeepSeek-R1-Distill-Qwen-32B, which is distilled from DeepSeek-R1, performs significantly better than DeepSeek-R1-Zero-Qwen-32B across all benchmarks. This suggests that distilling reasoning capability from powerful models into smaller models is more effective than gaining reasoning capability through large-scale RL directly on smaller models. The former approach achieves better performance with less compute. Note that only SFT is used for distillation in this study; performance could be further improved by combining SFT and RL for distillation, at the cost of more compute.

The annotated performance of R1-Zero and R1 serves as a reminder of the limitations of distillation. Although distillation is both effective and economical, achieving the absolute best performance and pushing the boundaries may still require powerful base models with large-scale RL.

Figure 15. Comparison of reasoning capabilities for a smaller model (Qwen-32B) with different techniques (annotated from Table 6 in R1 paper [2] ).

On the application side, the effectiveness and lower cost of distillation as a way to give smaller models reasoning capabilities are very promising. Many such smaller models will likely be created and deployed for applications that can be well supported with a certain level of reasoning capability.

Discussions and Related Thoughts

1. Two interesting details about DeepSeek model evolution that may uncover more insights.

1.1. For the DeepSeek R1 development, why is DeepSeek V3-Base used as the starting point for Stage 3 instead of the RoRL checkpoint from Stage 2?

In Figure 12, which describes the 4-stage pipeline for R1 development, Step 3 starts from the V3-Base model, not the RoRL checkpoint, after the SFT dataset with 800k samples has been created. I am not aware of the exact reason, but there are two potential explanations:

On the one hand, it could simply be that both were tried and the final model performed better on benchmarks with V3-Base as the starting point, or that training from the RoRL checkpoint performed so much worse in early training or in learning-rate annealing analysis that it was never run to completion.

On the other hand, it could be that the RoRL checkpoint is indeed only designed as a data generator to get a better CoT dataset. If we follow this logic, it seems that we could get a higher-quality dataset by performing the first two stages (SFT + RL) multiple times with progressively harder questions before the final fine-tuning to get R1. As a result, the R1 performance could be improved further. In fact, rStar-Math [19] tried a similar approach to help small LLMs master math reasoning. It includes four rounds of self-evolution, with each round using a dataset created from the previous round's model checkpoint and each round starting from the initial model.

1.2. According to the model evolution process (summarized in Figure 1), V3 SFT data relies on the R1 model to generate reasoning data (Page 4 and 28), while R1 also leverages CoT prompts to V3 to generate non-reasoning data for SFT in Stage 3 of the 4-stage pipeline (Page 11).

This interdependence is interesting and is perfectly reasonable since V3 and R1 are likely being developed in parallel after V3-base models have been pre-trained, and reasoning and non-reasoning capabilities could be bootstrapped (mutually enhanced) this way. Although the papers do not touch on them, details about the following questions could give more insights about the post-training and model evolution process:

What is the nature of the bootstrap process? From the papers' descriptions, this interdependence in data generation is likely just a single exchange, not an iterative loop. Such an arrangement helps minimize the risk of reinforcing errors or overfitting.

What is the relative development order of V3 and R1 models and who finishes training first? Given that the V3 model was released earlier, it is natural to assume that V3 finished training first. However, the V3 model relies on the R1 series of models for reasoning data during SFT, so R1 must have been in a relatively mature stage to provide such data. One possibility is that the R1 model used to generate the reasoning data for V3 is the RoRL checkpoint in Figure 1. This is the model after the end of the second stage of the 4-stage pipeline for R1 training.

However, if the R1 model finished training first, the papers do not provide enough detail for an educated guess about which V3 checkpoint was used to generate the non-reasoning data for R1 through chain-of-thought prompting.

2. Techniques to gain reasoning capabilities

There are three approaches described in the model evolution section above for gaining reasoning capability:

Only RL for R1-Zero

SFT + RL for R1

SFT (distillation) for smaller models

Any other approaches?

The paper mainly focuses on training and data preparation strategies to create reasoning models. Inference-time scaling (or test-time compute scaling) is another dimension along which to expand a reasoning model's capabilities [12][13]. Intuitively, humans think longer about difficult problems to improve their decisions. The R1-Zero training process also demonstrates that LLMs acquire similar behavior, allocating more compute (a longer thinking process) to harder questions as training progresses. Inference-time scaling aims to answer this question: can we enable LLMs to make the most effective use of additional computation at test time to improve their responses?

This is an active new research area with many unknowns. There is no clear winning approach, and many methods have limitations. We will briefly touch on two approaches: Process Reward Model (PRM) and Monte Carlo Tree Search (MCTS). Note that both can be used in training (the RL-style training described above) and in inference to improve reasoning models.

PRM is a learned model (reward or value model) that can evaluate not just final results, but also the intermediate steps or partial solutions leading up to the final answer. In other words, PRM can look at how a solution is being derived step by step and provide reward for each step. Best-of-N, beam search, and lookahead search are three common algorithms that can leverage PRM to make decisions and [12] has summarized the algorithms well in Figure 2 of the reference captured below.
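Of the three algorithms, Best-of-N is the easiest to sketch. In the snippet below `step_scorer` is a hypothetical stand-in for a trained PRM, and combining step scores with `min` (a solution is only as good as its weakest step) is one possible aggregation that I am assuming for illustration.

```python
def best_of_n(candidates, step_scorer, aggregate=min):
    """Return the candidate solution whose aggregated step scores are highest.

    candidates: list of solutions, each a list of reasoning steps (strings).
    step_scorer: stand-in for a PRM, mapping one step to a score in [0, 1].
    aggregate: how step scores combine into a solution score (min is an assumption).
    """
    return max(candidates, key=lambda steps: aggregate(step_scorer(s) for s in steps))


def toy_step_scorer(step: str) -> float:
    """Toy scorer that distrusts any step containing the word 'guess'."""
    return 0.1 if "guess" in step else 0.9


solutions = [
    ["guess the result", "answer: 5"],
    ["compute 2 + 2 = 4", "answer: 4"],
]
print(best_of_n(solutions, toy_step_scorer))
```

Beam search and lookahead search apply the same scorer during generation rather than after it, pruning weak partial solutions early.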

The DeepSeek R1 team attempted to use a PRM for training but did not adopt it because of three challenges: it is hard to explicitly define a fine-grained step in general reasoning; automatically determining whether an intermediate step is correct is challenging; and PRMs invite reward hacking. The paper also points out that, in the R1 experiments, the advantage of PRM is limited by the additional computational overhead it introduces during learning.

Similarly, a recent paper from the Qwen team [18] confirms that incorrect reasoning steps are common in the chain-of-thought data used for fine-tuning, as shown in the figure above (Figure 6 in the reference paper). [18] mitigates this by developing a consensus filtering mechanism that integrates Monte Carlo estimation with LLM-as-a-judge, and it advocates a more comprehensive evaluation framework that combines response-level and step-level metrics. So PRM still has the potential to improve LLM reasoning, but more research is needed to reach an effective solution.

Further, although the R1 model does not use a PRM directly in training, the PRM technique could be implemented at the application layer to allocate more compute to harder problems, and the chat application might do so to improve its reasoning capability.

MCTS uses an exploration–exploitation balance (e.g., UCB formula) to decide which branch to expand at each iteration, focusing computational budget on more promising regions of the tree over time. The main difference is that MCTS has an adaptive rollout strategy, compared to simple "sample-and-score" or "expand-and-score" in PRM.
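The UCB rule mentioned above can be sketched as a scoring function over child nodes. This uses the classic UCB1 formula; the exploration constant `c=1.4` and the tuple layout for children are illustrative assumptions, not details from the R1 paper.

```python
import math


def ucb1(total_value, visits, parent_visits, c=1.4):
    """UCB1 score: average value (exploitation) plus a visit-count bonus (exploration)."""
    if visits == 0:
        return math.inf   # always try unvisited branches first
    return total_value / visits + c * math.sqrt(math.log(parent_visits) / visits)


def select_child(children, parent_visits):
    """children: list of (name, total_value, visits); returns the name to expand next."""
    return max(children, key=lambda child: ucb1(child[1], child[2], parent_visits))[0]


print(select_child([("a", 5.0, 10), ("b", 0.0, 0)], parent_visits=10))
print(select_child([("a", 9.0, 10), ("b", 1.0, 10)], parent_visits=20))
```

The first call picks the unvisited branch and the second picks the branch with the higher average value, which illustrates how the search budget drifts toward promising regions while still trying new ones.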

MCTS with a good value model was critical to the success of AlphaGo and AlphaZero. R1 also explored using MCTS to enhance test-time compute but found it hard to turn into a practical solution, for two reasons: unlike board games, which have a relatively well-defined set of moves, token generation presents a much larger search space with a large branching factor, leading to computational challenges; and the far less constrained "environment" of language tasks makes it difficult to train a good value model.

Reasoning models are an area of active research, and the community is likely either to mitigate these issues or to find new approaches to building reasoning capability into LLMs.

3. What do DeepSeek's advances mean for open-source models?

DeepSeek’s release of the V3 and R1 models is a landmark moment for the open-source community. These models push the boundaries of what is achievable with open-source AI, demonstrating that high-caliber performance, scalability, and robustness can be attained without proprietary constraints. They not only set a new benchmark for open-source models but also signal that community-driven innovation can rival, and in some cases surpass, commercial offerings. The advances in architecture, efficiency, and capability embodied in these models are likely to spur a wave of experimentation and development across applications.

Moreover, the DeepSeek V3 and R1 models underscore the transformative power of open collaboration in AI research. DeepSeek's own development benefited substantially from open-source work, as described in the Key Architectural Innovations section. By making these state-of-the-art models available to everyone, DeepSeek is democratizing access to cutting-edge technology, which is critical for leveling the playing field. Researchers, startups, and hobbyists alike can now build on a robust foundation, fostering an ecosystem that thrives on transparency, shared knowledge, and continual improvement. This release not only paves the way for faster progress in AI but also reinforces the value of open-source initiatives in driving inclusive and diverse technological advancement.

References

[1] DeepSeek Team. DeepSeek-V3 Technical Report, 2024.
URL: https://arxiv.org/abs/2412.19437

[2] DeepSeek Team. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning, 2025.
URL: https://arxiv.org/pdf/2501.12948v1

[3] Shao, Z. et al. DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models (GRPO), 2024.
URL: https://arxiv.org/pdf/2402.03300v3

[4] Dai, D. et al. DeepSeekMoE: Towards Ultimate Expert Specialization in Mixture-of-Experts Language Models, 2024.
URL: https://arxiv.org/abs/2401.06066

[5] DeepSeek Team. DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model, 2024.
URL: https://arxiv.org/abs/2405.04434

[6] Gloeckle, F. et al. Better & Faster Large Language Models via Multi-token Prediction, 2024.
URL: https://openreview.net/pdf?id=pEWAcejiU2

[7] Peng, B. et al. YaRN: Efficient Context Window Extension of Large Language Models, 2023.
URL: https://arxiv.org/pdf/2309.00071v2

[8] Stern, M. et al. Blockwise Parallel Decoding for Deep Autoregressive Models, 2018.
URL: https://arxiv.org/abs/1811.03115

[9] Chen, C. et al. Accelerating Large Language Model Decoding with Speculative Sampling, 2023.
URL: https://arxiv.org/abs/2302.01318

[10] Chen, S. et al. Extending Context Window of Large Language Models via Positional Interpolation, 2023.
URL: https://arxiv.org/abs/2306.15595

[11] Su, J., Lu, Y., Pan, S. et al. RoFormer: Enhanced Transformer with Rotary Position Embedding, 2021.
URL: https://arxiv.org/abs/2104.09864

[12] Snell, C. et al. Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters, 2024.
URL: https://arxiv.org/abs/2408.03314

[13] OpenAI. Learning to reason with LLMs, 2024.
URL: https://openai.com/index/learning-to-reason-with-llms/

[14] Meng, F. et al. TransMLA: Multi-Head Latent Attention Is All You Need, 2025.
URL: https://arxiv.org/abs/2502.07864

[15] Schulman, J., Wolski, F., Dhariwal, P. et al. Proximal Policy Optimization Algorithms, 2017.
URL: https://arxiv.org/abs/1707.06347

[16] Schulman, J. Approximating KL divergence, 2020.
URL: http://joschu.net/blog/kl-approx.html

[17] Bai, Y., Jones, A., Lukosiute, K. et al. Constitutional AI: Harmlessness from AI Feedback, 2022.
URL: https://arxiv.org/abs/2212.08073

[18] Zhang, Z. et al. The Lessons of Developing Process Reward Models in Mathematical Reasoning, 2025.
URL: https://arxiv.org/pdf/2501.07301v1

[19] Guan, X. et al. rStar-Math: Small LLMs Can Master Math Reasoning with Self-Evolved Deep Thinking, 2025.
URL: https://arxiv.org/pdf/2501.04519v1